Problem-Solving Log

- KMP pattern matching

1 | class Solution { |
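For reference, KMP pattern matching can be sketched in Python as follows. The function names `build_lps` and `kmp_search` are illustrative assumptions and are not taken from the original solution.

```python
def build_lps(pattern):
    """lps[i] is the length of the longest proper prefix of pattern[:i + 1]
    that is also a suffix of it."""
    lps = [0] * len(pattern)
    k = 0  # length of the currently matched prefix
    for i in range(1, len(pattern)):
        while k > 0 and pattern[i] != pattern[k]:
            k = lps[k - 1]  # fall back to the next shorter border
        if pattern[i] == pattern[k]:
            k += 1
        lps[i] = k
    return lps


def kmp_search(text, pattern):
    """Return the index of the first occurrence of pattern in text, or -1."""
    if not pattern:
        return 0
    lps = build_lps(pattern)
    k = 0  # number of pattern characters matched so far
    for i, ch in enumerate(text):
        while k > 0 and ch != pattern[k]:
            k = lps[k - 1]
        if ch == pattern[k]:
            k += 1
        if k == len(pattern):
            return i - k + 1
    return -1
```

The prefix table lets the search avoid re-reading text characters, so the total work is linear in the combined length of the text and the pattern.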

- Sliding window maximum

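One common approach to the sliding window maximum is a monotonic deque of indices. The sketch below assumes that approach; the name `max_sliding_window` is illustrative rather than taken from the original solution.

```python
from collections import deque


def max_sliding_window(nums, k):
    """Return the maximum of every length-k window of nums."""
    window = deque()  # indices whose values decrease from front to back
    result = []
    for i, x in enumerate(nums):
        # Drop indices whose values can no longer be a window maximum.
        while window and nums[window[-1]] <= x:
            window.pop()
        window.append(i)
        # Drop the front index once it has slid out of the window.
        if window[0] <= i - k:
            window.popleft()
        if i >= k - 1:
            result.append(nums[window[0]])
    return result
```

Each index enters and leaves the deque at most once, so the whole pass is linear in the length of `nums`.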

- Finding the top k frequent elements with a priority queue


1 | class Solution { |

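The priority-queue idea for the top k frequent elements can be sketched with Python's `heapq`, keeping a min-heap of at most k entries. The function name `top_k_frequent` is an illustrative assumption, not the original solution.

```python
import heapq
from collections import Counter


def top_k_frequent(nums, k):
    """Return the k most frequent values, most frequent first."""
    counts = Counter(nums)
    heap = []  # min-heap of (count, value) pairs, never larger than k
    for value, count in counts.items():
        heapq.heappush(heap, (count, value))
        if len(heap) > k:
            heapq.heappop(heap)  # evict the least frequent candidate
    return [value for count, value in sorted(heap, reverse=True)]
```

Capping the heap at k entries keeps each push or pop at O(log k) instead of O(log n), which is the reason to prefer a heap over sorting all counts.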

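Gin is a web framework for Go (golang). It is elegantly packaged, has a friendly API and clearly commented source code, and is fast, flexible, and easy to make fault-tolerant. Its main authors are Manu, Javier, and Bo-Yi Wu. Its first version was released in 2016, and it is currently one of the most popular open-source Go frameworks.

According to the TechEmpower Framework Benchmarks Round 22 results, which rank existing web frameworks, Gin scored 95,900 and placed 185th. By comparison, Spring, another web framework widely used in industry, scored only 24,082 and placed 381st, so in this benchmark Gin performs considerably better than Spring.

Among Go web frameworks, those that rank above Gin have less mature ecosystems, so Gin is a good choice for Go development. Gin's components and supported features are as follows: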

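| Component | Function |
|---|---|
| server | Acts as the server: listens on a port and accepts requests |
| router | Routing and grouped routing; dispatches each request to its handler function |
| middleware | Middleware support: incoming HTTP requests pass through middleware before reaching the handler function. Examples include request logging, authentication (such as token validation), CORS support, and CSRF checks |
| template engine | Template engine: lets backend code render the content of HTML templates and return the rendered HTML to the frontend |
| Crash-free | Catches panics raised at runtime while handling HTTP requests and recovers from them, keeping the service available |
| JSON validation | Parses and validates the JSON content of a request, for example required fields |
| Error management | Gin provides a simple way to collect the errors that occur while handling an HTTP request; a middleware can then write them to a log file or a database, or send them to another system |
| Extendable middleware | Supports user-defined middleware |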

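Symptom: errors repeat in a loop and the new version of the server cannot be uploaded.

Cause: the file lock used by the SSH connection is no longer valid.

Solution: on the local machine, press Ctrl+Shift+P to open the Command Palette, enter Kill VS Code Server on Host, and shut down the VS Code server on the host that cannot be reached. Then reconnect.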

DEtection TRansformer (DETR) is an object detection framework that treats detection as a direct set prediction problem. It removes the need for hand-designed components and uses a Transformer encoder-decoder architecture to improve the accuracy and efficiency of object detection. Within two years, DETR has undergone a remarkable transformation. This survey dissects the key advancements, analyzes the current state of DETR, and considers its future, showing how DETR redefines object detection.

Keywords: Object Detection, Transformer, Detection Transformer

Object detection is the task of automatically identifying and localizing objects within an image or video. It uses computer vision techniques, such as deep learning models, to analyze and classify the regions of an image that contain objects of interest. As a fundamental building block of computer vision, object detection has changed remarkably in recent years. Early efforts relied on meticulously crafted features and laborious two-stage pipelines, and struggled to achieve both accuracy and efficiency. The emergence of DETR (Detection Transformer) in 2020 marked a pivotal moment, introducing a new paradigm that overcomes these limitations and opens up new possibilities for object detection.

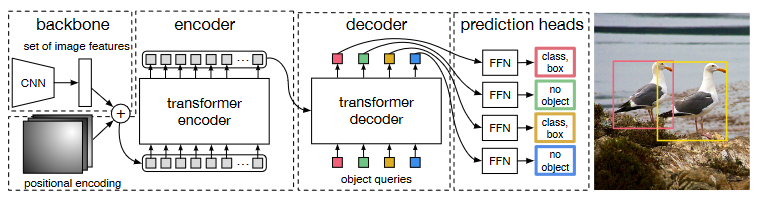

DETR views object detection as a set prediction problem and introduces a remarkably concise detection pipeline. A Convolutional Neural Network (CNN) extracts base features, which are then fed into a Transformer for relationship modeling. The resulting outputs are matched with the ground truth for the image using a bipartite graph matching algorithm. The diagram above illustrates the methodology in detail; its key design ideas include:

Modeling object detection as a set prediction problem:

DETR conceptualizes object detection as a set prediction problem. Instead of treating each object individually, DETR aims to predict the entire set of objects collectively. This global perspective is a departure from the conventional paradigm.

Bipartite Matching for Label Assignment:

To accomplish label assignment, DETR employs a bipartite matching strategy. This involves using the Hungarian algorithm, a combinatorial optimization algorithm, to determine the optimal matching between the predicted objects and the ground truth. This approach ensures effective and accurate label assignment.
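To make the matching step concrete, here is a small Python sketch that finds the minimum-cost one-to-one assignment by exhaustive search. This is not the Hungarian algorithm itself: it reaches the same optimum on tiny inputs, whereas the Hungarian algorithm does so in polynomial time. The cost values and the name `optimal_matching` are hypothetical.

```python
from itertools import permutations


def optimal_matching(cost):
    """cost[p][g] is the cost of assigning prediction p to ground truth g.

    Assumes there are at least as many predictions as ground-truth objects.
    Returns (pairs, total) for the cheapest one-to-one assignment.
    """
    n_pred, n_gt = len(cost), len(cost[0])
    best_total, best_pairs = float("inf"), []
    # Try every way of choosing one distinct prediction per ground truth.
    for preds in permutations(range(n_pred), n_gt):
        total = sum(cost[p][g] for g, p in enumerate(preds))
        if total < best_total:
            best_total = total
            best_pairs = [(p, g) for g, p in enumerate(preds)]
    return best_pairs, best_total


# Hypothetical costs: 3 predictions (rows) against 2 ground-truth objects.
cost = [
    [0.9, 0.1],
    [0.2, 0.8],
    [0.5, 0.6],
]
pairs, total = optimal_matching(cost)
```

In this example, prediction 1 is matched to ground truth 0 and prediction 0 to ground truth 1. Prediction 2 is left unmatched, which in DETR corresponds to the "no object" class.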

Transformer-based Encoder-Decoder Structure:

DETR leverages the Transformer architecture with an encoder-decoder structure. This choice turns object detection into an end-to-end problem, eliminating the need for post-processing steps such as Non-Maximum Suppression (NMS). The Transformer's attention mechanism enables global context understanding, contributing to improved detection accuracy.

Avoidance of handcrafted anchor priors:

Unlike traditional methods that rely on manually defined anchor priors, DETR avoids such handcrafted position prior information. This is achieved through its set-based approach, making the model more flexible and less dependent on predefined anchor boxes.

Background

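Purpose: select, from the background information, the information most relevant to the current task objective.

Application scenario: sequence data processing.

Types of mechanism: self-attention, spatial attention, and temporal attention.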

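Classification

Dot-product self-attention

Steps for computing self-attention in NLP:

1. Preprocess the input data \(X\).
2. Initialize the weights \(W_Q,W_K,W_V\).
3. Compute the K, Q, and V matrices (this applies only to encoding the input; the Q, K, and V used when the encoder output is passed to the decoder are not computed this way):

   \[ \begin{cases}K=XW_K\\Q=XW_Q\\V=XW_V\end{cases} \]

4. Compute the attention scores \(\mathrm{softmax}(\frac{QK^T}{\sqrt{d_k}})\), then multiply them by \(V\).
5. Obtain the self-attention matrix \(\mathrm{softmax}(\frac{QK^T}{\sqrt{d_k}})V\).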
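The steps above can be sketched in plain Python without any numerical library. The helper names (`matmul`, `transpose`, `softmax_rows`, `self_attention`) and the example input and weights are assumptions chosen for illustration; a real model would learn the weights and use a tensor library.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax_rows(m):
    """Apply a numerically stable softmax to each row."""
    out = []
    for row in m:
        peak = max(row)
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def self_attention(x, w_q, w_k, w_v):
    """Return (softmax(QK^T / sqrt(d_k)) V, attention weights)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, transpose(k))]
    weights = softmax_rows(scores)
    return matmul(weights, v), weights


# Hypothetical input: two tokens with 2-dimensional embeddings, identity weights.
identity = [[1.0, 0.0], [0.0, 1.0]]
x = [[1.0, 0.0], [0.0, 1.0]]
output, weights = self_attention(x, identity, identity, identity)
```

With identity weights, each token attends most strongly to itself, because its query has the largest dot product with its own key.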

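Here, multiplying the input matrix X by the corresponding weights produces the Q, K, and V matrices, which respectively represent:

Next, consider the formula for the attention scores:

The first term is the inner product of Q and \(K^T\). This term measures the similarity between the Q and K vectors.

Consider a simple set of two-dimensional vectors: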
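As an illustration of how the inner product measures similarity, the sketch below uses hypothetical two-dimensional vectors chosen for this example; they are not the vectors from the original post.

```python
def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


q = [1.0, 0.0]
keys = {
    "same direction": [2.0, 0.0],
    "orthogonal": [0.0, 3.0],
    "opposite direction": [-1.0, 0.0],
}
# A larger score means the key is more similar to the query.
scores = {name: dot(q, k) for name, k in keys.items()}
```

A key that points the same way as the query gets a positive score, an orthogonal key gets zero, and a key pointing the opposite way gets a negative score.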

    curl -X POST -d "DDDDD=[student ID]&upass=[password]&0MKKey=" [campus network login page URL]

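Clash GUI installation guide: Clash安装教程Win10,Linux. Windows版 | by van-der-Poel | Medium

Clash command-line usage: GLaDOS

After installation, run `cd clash`, then run the following command to start a screen session in which the command-line proxy program runs on the machine:

    screen -S vpn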

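Then set the proxy that the terminal uses:

    vi ~/.bashrc

Add the following two lines:

    export http_proxy='http://localhost:[your HTTP proxy port]'

This port may already be in use; if so, switch to another one. The configuration file is ~/clash/glados.yaml.

Test:

    wget www.google.com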

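Sometimes Clash is running and the network is connected, yet there is still no internet access. In that case, reset the proxy port.

To view the proxy settings currently in effect (the http_proxy and https_proxy variable names should be lowercase):

    env | grep -i proxy

If they do not match the Clash port, clear them:

    unset http_proxy

Then set them again manually, or persist them in ~/.bashrc, replacing 7892 with the port specified in glados.yaml:

    export http_proxy=http://127.0.0.1:7892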
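The same check can be scripted. The Python sketch below reports which lowercase proxy variables do not point at the expected Clash port. The function name `proxy_port_mismatches` is hypothetical, and the sketch assumes each variable holds a full URL including the scheme, such as `http://127.0.0.1:7892`.

```python
import os
from urllib.parse import urlsplit


def proxy_port_mismatches(expected_port, env=None):
    """Return {variable: value} for proxy variables whose port differs.

    Variables that are unset or empty are ignored. A value without a scheme
    parses to no port and is therefore reported as a mismatch.
    """
    env = os.environ if env is None else env
    mismatches = {}
    for name in ("http_proxy", "https_proxy"):
        value = env.get(name)
        if not value:
            continue
        if urlsplit(value).port != expected_port:
            mismatches[name] = value
    return mismatches


# Hypothetical environment: https_proxy still points at an old port.
example_env = {
    "http_proxy": "http://127.0.0.1:7892",
    "https_proxy": "http://127.0.0.1:7890",
}
stale = proxy_port_mismatches(7892, example_env)
```

Calling `proxy_port_mismatches(7892)` with no second argument inspects the real environment of the current process.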

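This blog is built with Hexo and GitHub Pages. The setup followed these articles:

博客 hexo - search results - Zhihu (zhihu.com)

Hexo+Next主题搭建个人博客+优化全过程(完整详细版) [Building a personal blog with Hexo and the NexT theme, plus optimization: the full process] - Zhihu (zhihu.com)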

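Image insertion issue

Once relative asset paths are configured, inserting an image with plain Markdown syntax leaves it missing from the preview. Using the tag-plugin syntax instead solves the problem:

    {% asset_img [img_name] [description] %}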

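Formula rendering issue:

Hexo显示latex公式 [Displaying LaTeX formulas in Hexo] - Zhihu (zhihu.com)

Remember to install and configure pandoc locally.

Once that is done, add `mathjax: true` to the front matter at the top of the Markdown file.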

Code block display:

See the following article: https://hexo.io/zh-cn/docs/syntax-highlight.html

Modifying the NexT theme configuration file as follows adds a one-click copy button to code blocks:

    codeblock:
      # Code Highlight theme
      # Available values: normal | night | night eighties | night blue | night bright | solarized | solarized dark | galactic
      # See: https://github.com/chriskempson/tomorrow-theme
      highlight_theme: normal
      # Add copy button on codeblock
      copy_button:
        enable: true
        # Show text copy result.
        show_result: true
        # Available values: default | flat | mac
        style:

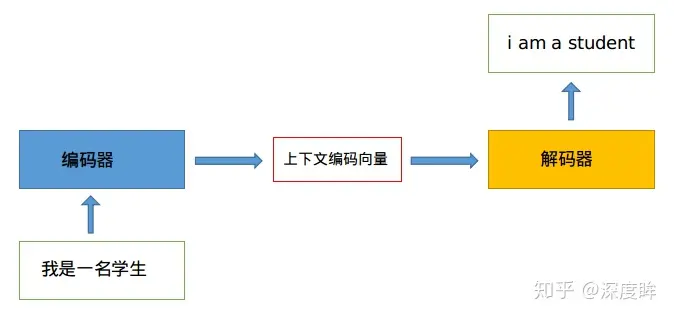

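The sequence-to-sequence (seq2seq) architecture is commonly used in machine translation and consists of two parts, an encoder and a decoder. The encoder converts the input text into a context vector, and the decoder decodes that vector into the target language.

This context vector usually has a fixed length, so the model does not handle inputs of different lengths equally well. To make better use of the input sequence, researchers introduced the attention mechanism, which assigns different attention weights to different parts of the input sequence.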

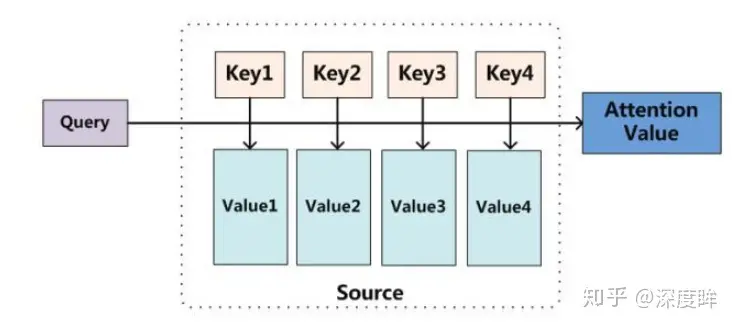

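Abstractly, the attention mechanism reduces to computations on three matrices, Q, K, and V, as shown in the figure below:

An attention layer internally maintains three weight matrices, q, k, and v, which hold the query weights, key weights, and value weights. Multiplying the input sequence vectors by each of q, k, and v yields three vectors: Q (Query, the query vector, which carries information about the current position in the output sequence and is used to compute the attention weights), K (Keys, the key vectors, which carry information about every position in the sequence and are used to compute the attention weights), and V (Values, the value vectors, which carry the actual values at every position of the input sequence and are combined with the attention weights to compute the output context vector).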